Blogger Robots.txt: Optimize Your Blog for Search Engines

Do you want to make your Blogger site more visible to search engines and attract more organic traffic? If so, you need to optimize your site for crawlers and indexing.

Crawlers are programs that scan the web and collect information about websites. Indexing is the process of storing and organizing the information collected by crawlers in a database.

By optimizing your site for crawlers and indexing, you can help search engines understand what your site is about, find your pages faster, and rank them higher in the search results.

In this guide, I will show you how to optimize your Blogger site for crawlers and indexing using two tools: Custom Robot Header Tags and robots.txt.

Custom Robot Header Tags are tags that you can use to control how search engines index your pages and treat the links on them. robots.txt is a file that you can use to communicate with crawlers and tell them which pages to crawl and which ones to ignore.
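The two tools act at different stages: robots.txt decides what a crawler may download, and header tags decide what gets indexed once a page has been downloaded. This means a crawler that robots.txt blocks never sees a noindex tag on that page. The minimal Python sketch below shows that interaction using the standard-library `urllib.robotparser`. The function name `noindex_can_take_effect`, the default `Googlebot` user agent and the example URLs are assumptions for illustration only.

```python
from urllib.robotparser import RobotFileParser


def noindex_can_take_effect(robots_txt: str, url: str, page_directives: str,
                            user_agent: str = "Googlebot") -> bool:
    """Return True only if the crawler may fetch `url` AND the page asks for noindex.

    A crawler blocked by robots.txt never downloads the page, so it never
    sees the noindex directive carried by the page's header tags.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from a string, no network access
    can_crawl = parser.can_fetch(user_agent, url)

    directives = {d.strip().lower() for d in page_directives.split(",")}
    # 'none' is shorthand for noindex + nofollow
    asks_for_noindex = "noindex" in directives or "none" in directives
    return can_crawl and asks_for_noindex


robots = "User-agent: *\nDisallow: /search\n"
# Blocked by robots.txt, so the noindex tag is never seen.
print(noindex_can_take_effect(robots, "https://example.blogspot.com/search/label/SEO", "noindex"))  # False
# Crawlable post with noindex, so the tag can be honored.
print(noindex_can_take_effect(robots, "https://example.blogspot.com/2024/01/my-post.html", "noindex, nofollow"))  # True
```

If you want a page removed from search results, the practical takeaway is to leave it crawlable and mark it noindex rather than blocking it in robots.txt.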

Let's get started!

What are Custom Robot Header Tags and How to Use Them?

Custom Robot Header Tags are tags that you can add to your Blogger site to instruct crawlers on how to treat your pages. For example, you can use these tags to:

- Allow or disallow crawlers from indexing your pages

- Specify whether crawlers should follow the links on your pages

- Prevent crawlers from showing a cached version or a snippet of your pages

- Stop search engines from offering translations of your pages

- Exclude your images from being indexed by crawlers

- Set a date after which your pages should stop appearing in search results

To use Custom Robot Header Tags on your Blogger site, follow these steps:

1. Go to Settings > Crawlers and indexing in your Blogger dashboard.

2. Turn on the "Enable custom robots header tags" option.

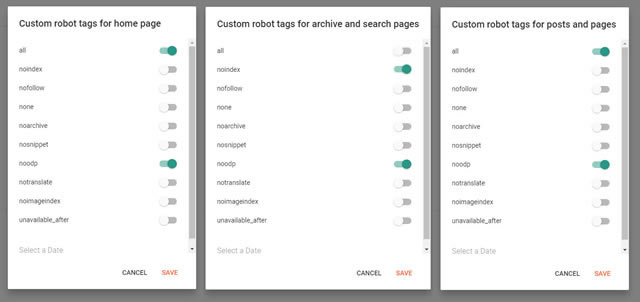

3. Select the tags that you want to apply to your site. You can choose different tags for your home page, for your archive and search pages, and for your posts and pages. Here is a brief explanation of each tag:

- all: This tag places no restrictions on indexing. It is the default behavior, so enabling it gives crawlers full permission to index everything on the affected pages.

- noindex: This tag prevents search engines from indexing the affected pages, so those pages don't appear in search results. If you enable it for every page type, you make your site invisible to search engines.

- nofollow: This tag tells crawlers not to follow the links on the affected pages. If you enable it, every link on those pages, including links to your own posts, is treated as nofollow.

- none: This tag is a combination of the noindex and nofollow tags. It tells crawlers neither to index the affected pages nor to follow their links.

- noarchive: This tag controls whether search engines can show a cached version of your pages in the search results. A cached version is a copy of a page that the search engine saved the last time it crawled it, which users could open if your site was down. If you enable this tag, you prevent search engines from showing that copy. Google has since removed cached links from its results, but other search engines may still honor this tag.

- nosnippet: This tag controls whether search engines can show a snippet of your pages in the search results. A snippet is the short text excerpt shown below a page's title that helps users get an idea of what the page is about. If you enable this tag, you prevent search engines from showing a snippet of your pages.

- noodp: This tag tells crawlers not to use information from the Open Directory Project (ODP, also known as DMOZ) to describe your site. ODP was a web directory of websites submitted by users. DMOZ shut down in 2017 and search engines no longer use its descriptions, so this tag has no practical effect today.

- notranslate: This tag tells search engines not to offer users a translation of your pages in the search results.

- noimageindex: This tag prevents search engines from indexing the images on the affected pages. Images are an important part of blogging and can bring organic traffic through image search, so enable this tag only if you don't want your images to appear there.

- unavailable_after: This tag sets a date after which the affected pages should no longer appear in search results. If you enable this tag, search engines stop showing those pages once that date has passed.

4. Click Save.
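Robots directives are typically written as a comma-separated list, as in a robots meta tag such as `<meta name="robots" content="noindex, nofollow">` or an `X-Robots-Tag` HTTP header. The hypothetical Python helpers below expand such a list into simple flags, following the tag descriptions above: `none` means noindex plus nofollow, and `all` adds no restriction. The function names are invented for illustration. The sketch assumes `unavailable_after` uses an ISO 8601 date, and it does not model how Blogger itself emits the tags.

```python
from datetime import datetime


def parse_robots_directives(content: str) -> dict:
    """Expand a comma-separated robots directive list into simple flags."""
    flags = {"index": True, "follow": True, "unavailable_after": None, "other": set()}
    for token in content.split(","):
        # Partition on the first ':' so 'unavailable_after: 2030-01-01' keeps its date.
        name, _, value = token.partition(":")
        name = name.strip().lower()
        if name in ("", "all", "index", "follow"):
            continue  # no restriction
        if name == "noindex":
            flags["index"] = False
        elif name == "nofollow":
            flags["follow"] = False
        elif name == "none":  # shorthand for noindex + nofollow
            flags["index"] = False
            flags["follow"] = False
        elif name == "unavailable_after":
            # Assumption: ISO 8601 date (e.g. 2030-01-01); other formats raise ValueError.
            flags["unavailable_after"] = datetime.fromisoformat(value.strip())
        else:
            flags["other"].add(name)  # noarchive, nosnippet, noimageindex, notranslate, ...
    return flags


def shown_in_results(flags: dict, now: datetime) -> bool:
    """True if the page may appear in search results at time `now` (naive datetimes)."""
    if not flags["index"]:
        return False
    expiry = flags["unavailable_after"]
    return expiry is None or now < expiry


tags = parse_robots_directives("noarchive, unavailable_after: 2030-01-01")
print(tags["other"])                                 # {'noarchive'}
print(shown_in_results(tags, datetime(2025, 6, 1)))  # True
print(shown_in_results(tags, datetime(2031, 1, 1)))  # False
```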

Here is an example of how to set up Custom Robot Header Tags for your Blogger site:
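As a purely hypothetical illustration, the Python sketch below models the idea that Blogger applies one set of tags per page group. It assigns a directive string to each group and then decides which group a URL belongs to. The `SETUP` values (all for the home page and for posts and pages, noindex for archive and search pages) are an assumed configuration, not a recommendation from this guide. The URL patterns assume Blogger's default structure: /YYYY/MM/slug.html for posts, /p/slug.html for pages and /search for label and search-result pages.

```python
import re
from urllib.parse import urlparse

# Hypothetical setup: one directive string per Blogger settings group.
SETUP = {
    "home": "all",
    "archive_search": "noindex",
    "post_page": "all",
}

# Assumed default Blogger URL shapes.
POST_RE = re.compile(r"^/\d{4}/\d{2}/[^/]+\.html$")  # e.g. /2024/01/my-post.html
PAGE_RE = re.compile(r"^/p/[^/]+\.html$")            # e.g. /p/about.html


def page_group(url: str) -> str:
    """Classify a Blogger URL into one of the three settings groups."""
    path = urlparse(url).path
    if path in ("", "/"):
        return "home"
    if POST_RE.match(path) or PAGE_RE.match(path):
        return "post_page"
    # Everything else (label and search results, date archives) counts as archive/search here.
    return "archive_search"


def directives_for(url: str) -> str:
    """Look up the directive string that the hypothetical SETUP assigns to a URL."""
    return SETUP[page_group(url)]


for url in ["https://example.blogspot.com/",
            "https://example.blogspot.com/search/label/SEO",
            "https://example.blogspot.com/2024/01/my-post.html"]:
    print(url, "->", directives_for(url))
```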

What is Blogger robots.txt and How to Add It

robots.txt is a file that you can add to your Blogger site to communicate with crawlers and tell them which pages to crawl and which ones to ignore. For example, you can use this file to:

- Allow or disallow crawlers from crawling certain pages or directories on your site

- Specify the location of your sitemap

- Request a delay between crawler visits to avoid overloading your server (some crawlers honor this Crawl-delay rule, but Googlebot ignores it)
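To see how a crawler reads these three kinds of rules, the sketch below feeds a sample robots.txt to Python's standard `urllib.robotparser`. The file contents, the `/private/` directory and the `ExampleBot` user agent are hypothetical. One caveat: Python's parser applies the first rule in a group that matches, whereas Google applies the most specific (longest) match. For the simple rules here the two approaches agree.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with a bot-specific group, a catch-all group and a sitemap.
SAMPLE_ROBOTS_TXT = """\
User-agent: ExampleBot
Crawl-delay: 10
Disallow: /private/

User-agent: *
Disallow: /private/
Allow: /

Sitemap: https://example.blogspot.com/sitemap.xml
"""


def load_rules(text: str) -> RobotFileParser:
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rules = load_rules(SAMPLE_ROBOTS_TXT)
print(rules.can_fetch("ExampleBot", "https://example.blogspot.com/private/notes.html"))  # False
print(rules.can_fetch("ExampleBot", "https://example.blogspot.com/2024/01/post.html"))   # True
print(rules.crawl_delay("ExampleBot"))  # 10
print(rules.site_maps())                # ['https://example.blogspot.com/sitemap.xml']
```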

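To add robots.txt to your Blogger site, follow these steps:

1. Go to Settings > Crawlers and indexing in your Blogger dashboard.

2. Turn on the "Enable custom robots.txt" option.

3. Paste the following rules into the Custom robots.txt text box, replacing yourblogname.com with your blog's address:

User-agent: *
Disallow: /search
Sitemap: https://yourblogname.com/sitemap.xml

The Disallow: /search rule keeps crawlers out of Blogger's label and search-result pages (every URL whose path starts with /search), and the Sitemap line tells crawlers where to find your sitemap.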

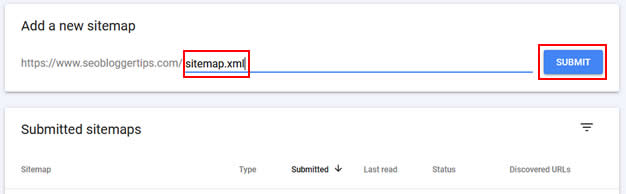
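Before pasting the rules into Blogger, you can check them locally. The sketch below runs the robots.txt rules above through Python's `urllib.robotparser` and reports which sample URLs Googlebot may crawl. The `preflight` function and the sample URLs are hypothetical, and yourblogname.com is the same placeholder used above. Python's parser uses simple prefix matching. That is enough for a rule like `Disallow: /search`, but it does not model extensions such as `*` wildcards in paths.

```python
from urllib.robotparser import RobotFileParser

# The rules from the steps above; yourblogname.com is a placeholder.
BLOGGER_ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Sitemap: https://yourblogname.com/sitemap.xml
"""


def preflight(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> dict[str, bool]:
    """Map each URL to whether `user_agent` may crawl it; fail if no Sitemap is declared."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    if not parser.site_maps():  # site_maps() returns None when no Sitemap line exists
        raise ValueError("robots.txt does not declare a Sitemap")
    return {url: parser.can_fetch(user_agent, url) for url in urls}


report = preflight(BLOGGER_ROBOTS_TXT, [
    "https://yourblogname.com/",
    "https://yourblogname.com/2024/01/my-post.html",
    "https://yourblogname.com/search/label/SEO",
    "https://yourblogname.com/search?q=robots",
])
for url, allowed in report.items():
    print("allowed" if allowed else "blocked", url)
```

Posts and the home page should come back as allowed, while label and search-result URLs should come back as blocked.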

How to Submit Your Sitemap to Google Search Console

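Blogger generates two sitemaps for your blog: sitemap.xml, which lists your posts, and sitemap-pages.xml, which lists your static pages. To submit them, open your property in Google Search Console, go to Sitemaps, enter sitemap.xml and click Submit, then repeat the process for sitemap-pages.xml.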
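After you submit a sitemap, Search Console reports how many URLs it discovered in it. The hedged Python sketch below counts the `<loc>` entries in a sitemap document using the standard XML parser. It handles both a plain `urlset` and a `sitemapindex`, which points to further sitemaps, since Blogger may serve its sitemap.xml as an index. The function name and sample XML are hypothetical. An empty `urlset` yields zero URLs. For example, sitemap-pages.xml would likely be empty on a blog that has no static pages yet.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_locations(xml_text: str) -> tuple[str, list[str]]:
    """Return ('urlset' or 'sitemapindex', list of <loc> URLs)."""
    root = ET.fromstring(xml_text)
    kind = root.tag.split("}")[-1]  # strip the '{namespace}' prefix
    if kind == "urlset":
        locs = root.findall("sm:url/sm:loc", NS)
    elif kind == "sitemapindex":
        locs = root.findall("sm:sitemap/sm:loc", NS)
    else:
        raise ValueError(f"not a sitemap: <{kind}>")
    return kind, [(loc.text or "").strip() for loc in locs]


SAMPLE_PAGES_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.blogspot.com/p/about.html</loc></url>
  <url><loc>https://example.blogspot.com/p/contact.html</loc></url>
</urlset>"""

kind, urls = sitemap_locations(SAMPLE_PAGES_SITEMAP)
print(kind, len(urls))  # urlset 2
```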

Conclusion

Optimizing your Blogger site for crawlers and indexing is an essential step for improving your SEO and increasing your organic traffic. By using Custom Robot Header Tags and a custom robots.txt file, you can control how crawlers access and index your site. By submitting your sitemaps to Google Search Console, you can help crawlers find and index your pages faster.

I hope this guide was helpful for you. If you have any questions or feedback, please leave a comment below.


Editor's Note: This post was originally published in January 2022 and has been completely revamped and updated for accuracy and comprehensiveness.

Can I use two properties in Search Console?

Yes, but only for different websites. It is not recommended to add www.yoursite.com and yoursite.com as separate properties for the same website: if both versions of the site are accessible, search engines can treat them as duplicate content, which can be bad for SEO.

Please help: my sitemap status shows "Couldn't fetch". Why is that?

I have added the sitemap and it works, but the pages sitemap shows zero. Can you teach me how to create pages for Blogger?